Strengthening Deep Neural Networks: Making AI Less Susceptible to Adversarial...

Description

As deep neural networks (DNNs) become increasingly common in real-world applications, the potential to deliberately "fool" them with data that wouldn't trick a human presents a new attack vector. This practical book examines real-world scenarios where DNNs, the algorithms intrinsic to much of AI, are used daily to process image, audio, and video data.

Author Katy Warr considers attack motivations, the risks posed by this adversarial input, and methods for increasing AI robustness to these attacks.

If you're a data scientist developing DNN algorithms, a security architect interested in how to make AI systems more resilient to attack, or someone fascinated by the differences between artificial and biological perception, this book is for you.

- Delve into DNNs and discover how they could be tricked by adversarial input
- Investigate methods used to generate adversarial input capable of fooling DNNs
- Explore real-world scenarios and model the adversarial threat
- Evaluate neural network robustness and learn methods to increase the resilience of AI systems to adversarial data
- Examine some ways in which AI might become better at mimicking human perception in years to come

You can also buy the book Strengthening Deep Neural Networks: Making AI Less Susceptible to Adversarial Trickery by Katy Warr via the link.


Similar products

Top listings


Top: Starling House, Alix E. Harrow, 420 UAH

Top: Children's book, 100 UAH

Top: The Dragon's Promise, 600 UAH

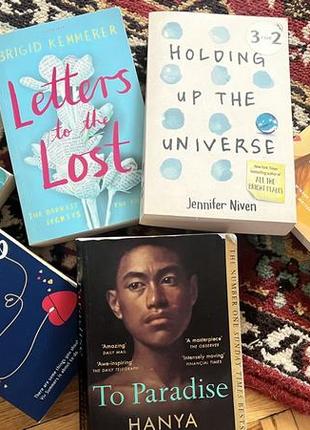

Top: Set of books in the original language, 650 UAH

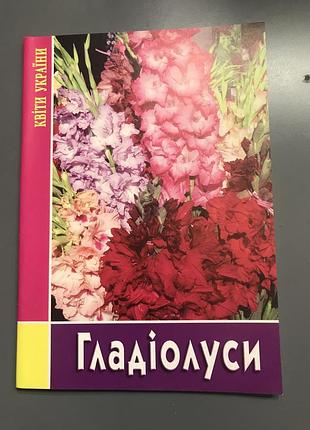

Top: Gladiolus booklet, 30 UAH

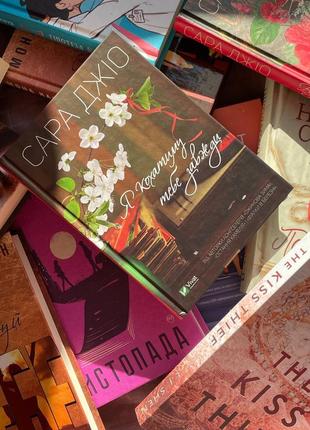

Top: Books in exchange for a donation to a fundraiser, 250 UAH

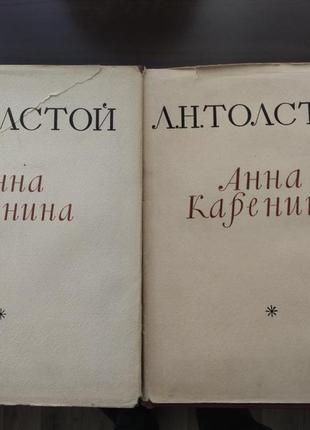

Top: Leo Tolstoy, Anna Karenina, 1550 UAH

Top: Toy in a ball (babls), 650 UAH